The goal of this lab is to introduce a preprocessing method called geometric correction. Geometric correction is required to properly align and locate an aerial image. Aerial images are never perfectly aligned, because a multitude of factors alter the angles of the image. In this lab exercise we will explore and practice two different methods of geometric correction.

Rectification is the process of converting data file coordinates to a different coordinate or grid system, known as a reference system.

1. Image-to-Map Rectification: This form of geometric correction utilizes a map coordinate system to rectify/transform the image data pixel coordinates.

2. Image-to-Image Rectification: This form of geometric correction uses a previously corrected image of the same location to rectify/transform the image data pixel coordinates.

Methods

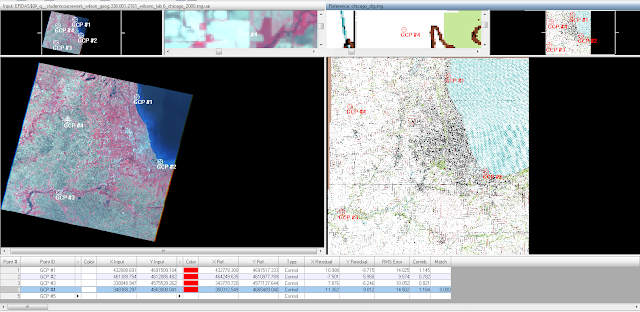

The first method I explored in the lab was Image-to-Map Rectification. For this exercise we used a USGS 7.5 minute digital raster graphic (DRG) covering a portion of Chicago, Illinois to geometrically correct a Landsat TM image of the same area (Fig. 1). I performed this task in Erdas Imagine 2015.

(Fig. 1) The USGS DRG is on the left and the uncorrected image of the Chicago area is on the right.

I used the Control Points option under the Multispectral tab in Erdas to perform the geometric correction. After opening the Control Points option I set the Geometric Model to Polynomial and used the first-order polynomial equation, per directions from my professor. I also set the USGS DRG map as the reference image.
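To make the first-order polynomial model a little more concrete, here is a minimal Python sketch of how such a transformation could be fitted from GCP pairs by least squares. This is only an illustration under my own assumptions, not how Erdas implements it; the function names (`fit_first_order`, `apply_first_order`, `solve_linear_system`) and the sample coordinates are hypothetical.

```python
def solve_linear_system(matrix, rhs):
    """Solve a small square system by Gaussian elimination with partial pivoting."""
    n = len(rhs)
    # Augment the matrix so row operations apply to both sides at once.
    aug = [[float(v) for v in row] + [float(b)] for row, b in zip(matrix, rhs)]
    for col in range(n):
        pivot = max(range(col, n), key=lambda r: abs(aug[r][col]))
        if abs(aug[pivot][col]) < 1e-12:
            raise ValueError("singular system: GCPs may be collinear")
        aug[col], aug[pivot] = aug[pivot], aug[col]
        for row in range(col + 1, n):
            factor = aug[row][col] / aug[col][col]
            for k in range(col, n + 1):
                aug[row][k] -= factor * aug[col][k]
    # Back substitution on the upper-triangular system.
    solution = [0.0] * n
    for row in range(n - 1, -1, -1):
        known = sum(aug[row][k] * solution[k] for k in range(row + 1, n))
        solution[row] = (aug[row][n] - known) / aug[row][row]
    return solution


def fit_first_order(source_points, reference_points):
    """Fit x' = a0 + a1*x + a2*y and y' = b0 + b1*x + b2*y by least squares.

    Returns [[a0, a1, a2], [b0, b1, b2]].
    """
    if len(source_points) != len(reference_points):
        raise ValueError("each source GCP needs a matching reference GCP")
    if len(source_points) < 3:
        raise ValueError("a first-order polynomial needs at least 3 GCPs")
    rows = [(1.0, x, y) for x, y in source_points]
    # Normal equations: (A^T A) c = A^T t, solved once per output axis.
    ata = [[sum(r[i] * r[j] for r in rows) for j in range(3)] for i in range(3)]
    coeffs = []
    for axis in (0, 1):
        atb = [sum(r[i] * ref[axis] for r, ref in zip(rows, reference_points))
               for i in range(3)]
        coeffs.append(solve_linear_system(ata, atb))
    return coeffs


def apply_first_order(coeffs, point):
    """Map one source coordinate into the reference system."""
    x, y = point
    (a0, a1, a2), (b0, b1, b2) = coeffs
    return (a0 + a1 * x + a2 * y, b0 + b1 * x + b2 * y)


# Hypothetical GCP pairs (source pixel coordinates -> reference coordinates).
source = [(0, 0), (100, 0), (0, 100), (100, 100)]
reference = [(10, 20), (210, -30), (60, 220), (260, 170)]
model = fit_first_order(source, reference)
print(apply_first_order(model, (50, 50)))
```

With more than three GCPs the fit is overdetermined, which is what lets the software report a residual error for each point.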

To correct the image I placed ground control points (GCPs) on both maps in the same locations using the "Create GCP" tool within the correction window. At the extent shown in Fig. 1 it is hard to place GCPs precisely, so after they are placed you can zoom in and adjust them to a more precise location. When placing GCPs you want to use fixed locations like "T" intersections of roadways or permanent buildings which have been in the area for a long time. It is not advisable to use features such as lakes or rivers, as they change over time and their locations may differ from image to image. Erdas automatically calculates the accuracy of my GCPs as Root Mean Square (RMS) error. The industry standard in remote sensing is an RMS error of 0.5 or below. For this first exercise I was only required to reach an RMS error of 2, since this was my first attempt at geometric correction.
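As a rough illustration of what the RMS error number means, the sketch below computes a per-GCP error and a total RMS error from the distance between where the model places each GCP and where the reference says it should be. The function name `gcp_rms_errors` and the sample points are my own assumptions, and I am assuming the coordinates are in pixel units; Erdas does this calculation for you.

```python
import math


def gcp_rms_errors(predicted_points, reference_points):
    """Return (per-point errors, total RMS error) for matched GCP pairs.

    Each per-point error is the straight-line distance between the
    transformed GCP and its reference location. The total is the square
    root of the mean of the squared per-point errors.
    """
    if not predicted_points or len(predicted_points) != len(reference_points):
        raise ValueError("need the same non-zero number of points in both lists")
    per_point = [
        math.hypot(px - rx, py - ry)
        for (px, py), (rx, ry) in zip(predicted_points, reference_points)
    ]
    total = math.sqrt(sum(e * e for e in per_point) / len(per_point))
    return per_point, total


# Hypothetical transformed GCPs versus their reference positions.
predicted = [(10.2, 20.1), (209.9, -30.3), (60.0, 220.2), (259.8, 169.9)]
reference = [(10.0, 20.0), (210.0, -30.0), (60.0, 220.0), (260.0, 170.0)]
errors, total_rms = gcp_rms_errors(predicted, reference)
print(errors, total_rms)
```

Because the errors are squared before averaging, one badly placed GCP raises the total much more than several slightly misplaced ones, which is why adjusting the worst point first tends to help the most.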

I placed four GCPs, per my instructions, in the general area requested by my professor (Fig. 2). I zoomed in and continued to adjust the points until I achieved an RMS error of 0.2148, well below the industry standard. There was very little error in the image we corrected, so displaying the correction is very difficult. In the next correction process you will be able to see a visible change from the original image to the corrected image.

(Fig. 2) First placement of the four GCPs for geometric correction.

(Fig. 3) Zoomed in view while adjusting the GCP locations more precisely.

The second method of Image-to-Image Rectification was explored using two satellite images of Sierra Leone. The process of Image-to-Image Rectification is exactly the same as the Image-to-Map method except you are utilizing an image which has been previously corrected as your reference.

The settings for the Control Points tool were all the same, with the exception of changing from the first-order polynomial to the third-order polynomial. This setting requires more GCPs to geometrically correct the image, because a higher-order polynomial has more coefficients to solve for. The additional GCPs also add to the precision of the correction. I was instructed to place 12 GCPs on the image in specific locations provided to me by my professor.
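The reason a higher-order polynomial needs more GCPs can be shown with a short calculation. A two-variable polynomial of order t has (t + 1)(t + 2) / 2 coefficients per output axis, and each GCP supplies one equation per axis, so that is also the minimum number of GCPs. The helper name `minimum_gcps` below is hypothetical and just wraps that formula.

```python
def minimum_gcps(order):
    """Minimum number of GCPs needed to solve a polynomial model of this order.

    A 2-D polynomial of order t has (t + 1) * (t + 2) / 2 coefficients
    for each output axis, and every GCP contributes one equation per axis.
    """
    if order < 1:
        raise ValueError("polynomial order must be at least 1")
    return (order + 1) * (order + 2) // 2


for order in (1, 2, 3):
    print(order, minimum_gcps(order))
```

By this count, the four GCPs in the first exercise and the 12 GCPs in this one are each slightly more than the minimum for their polynomial order, which leaves some redundancy for the RMS error to measure.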

(Fig. 4) The two images displayed using the Swipe tool showing the error in the bottom (uncorrected) image.

After performing the same operation as on the previous image, I adjusted all 12 of the GCPs until my RMS error was 0.1785. I used bilinear interpolation to resample the image and exported it as a new file. The next step was to bring in the resampled image and compare it to the reference image to inspect the accuracy of my geometric correction. The alignment was perfect, as expected. The corrected image appears hazy, but a simple image correction operation in Erdas would remedy the issue.
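For readers curious about what bilinear interpolation does during resampling, here is a minimal sketch that samples a small grid of pixel values at a fractional position by weighting the four surrounding pixels. The function name `bilinear_sample` and the tiny grid are assumptions for illustration only; this is not the Erdas resampling code.

```python
def bilinear_sample(grid, x, y):
    """Sample a 2-D grid (list of rows) at fractional column x and row y.

    The result is a distance-weighted average of the four pixels that
    surround the requested position.
    """
    rows, cols = len(grid), len(grid[0])
    if not (0 <= x <= cols - 1 and 0 <= y <= rows - 1):
        raise ValueError("sample position is outside the grid")
    x0, y0 = int(x), int(y)
    # Clamp the neighbours so positions on the last row/column stay in range.
    x1, y1 = min(x0 + 1, cols - 1), min(y0 + 1, rows - 1)
    fx, fy = x - x0, y - y0
    top = grid[y0][x0] * (1 - fx) + grid[y0][x1] * fx
    bottom = grid[y1][x0] * (1 - fx) + grid[y1][x1] * fx
    return top * (1 - fy) + bottom * fy


# Hypothetical 2x2 block of brightness values.
pixels = [[10, 20],
          [30, 40]]
print(bilinear_sample(pixels, 0.5, 0.5))
```

Since each output pixel is an average of its neighbours, bilinear resampling smooths the image slightly compared with nearest neighbor resampling, which keeps the original pixel values.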

(Fig. 5) The corrected image (top) aligned with the reference image (bottom).

Results

This lab exercise gave me a basic understanding of geometric correction. Having images which are geometrically correct is essential for proper and accurate analysis. Geometric error may not always be visible to the human eye; however, all images should be checked and corrected for any errors before analysis is completed. Locating good areas for GCP placement takes a bit of practice, and this lab provided that practice effectively.

Sources

All images were acquired from the United States Geological Survey (USGS).